Abstract

Three-dimensional Gaussian Splatting (3DGS) has recently emerged as an efficient representation for novel-view synthesis, achieving impressive visual quality. However, in scenes dominated by large, low-texture regions, which are common in indoor environments, the photometric loss used to optimize 3DGS yields ambiguous geometry and fails to recover high-fidelity 3D surfaces. To overcome this limitation, we introduce PlanarGS, a 3DGS-based framework tailored for indoor scene reconstruction. Specifically, we design a pipeline for Language-Prompted Planar Priors (LP3) that employs a pretrained vision-language segmentation model and refines its region proposals through cross-view fusion and inspection with geometric priors. 3D Gaussians in our framework are optimized with two additional terms: a planar prior supervision term that enforces planar consistency, and a geometric prior supervision term that steers the Gaussians toward the depth and normal cues. Extensive experiments on standard indoor benchmarks show that PlanarGS reconstructs accurate and detailed 3D surfaces, consistently outperforming state-of-the-art methods by a large margin.
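As a rough illustration of what a geometric prior supervision term can look like, the sketch below compares rendered depth and normal maps against prior maps. It assumes the depth prior is known only up to an unknown scale and shift (typical of monocular estimators), so it is first aligned to the rendered depth by least squares. The function names, the L1 / cosine loss choices, and the weights are hypothetical and are not taken from PlanarGS itself.

```python
import numpy as np


def align_scale_shift(prior_depth, rendered_depth, mask):
    """Fit scalars s, t so that s * prior + t best matches the rendered depth
    (least squares over masked pixels) and return the aligned prior depth map."""
    p = prior_depth[mask]
    r = rendered_depth[mask]
    A = np.stack([p, np.ones_like(p)], axis=1)
    (s, t), *_ = np.linalg.lstsq(A, r, rcond=None)
    return s * prior_depth + t


def depth_prior_loss(rendered_depth, prior_depth, mask):
    """Mean L1 error between rendered depth and the scale/shift-aligned prior."""
    aligned = align_scale_shift(prior_depth, rendered_depth, mask)
    return np.abs(rendered_depth - aligned)[mask].mean()


def normal_prior_loss(rendered_normal, prior_normal, mask):
    """Mean (1 - cosine similarity) between H x W x 3 normal maps."""
    rn = rendered_normal / np.linalg.norm(rendered_normal, axis=-1, keepdims=True)
    pn = prior_normal / np.linalg.norm(prior_normal, axis=-1, keepdims=True)
    cos = np.sum(rn * pn, axis=-1)
    return (1.0 - cos)[mask].mean()


def geometric_prior_loss(rendered_depth, prior_depth, rendered_normal,
                         prior_normal, mask, w_depth=1.0, w_normal=1.0):
    """Weighted sum of the two prior terms; the weights are placeholders."""
    return (w_depth * depth_prior_loss(rendered_depth, prior_depth, mask)
            + w_normal * normal_prior_loss(rendered_normal, prior_normal, mask))
```

In an autograd framework the fitted scale and shift would usually be treated as constants (detached) so that only the rendered maps receive gradients; NumPy is used here only to keep the sketch dependency-light.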

Method

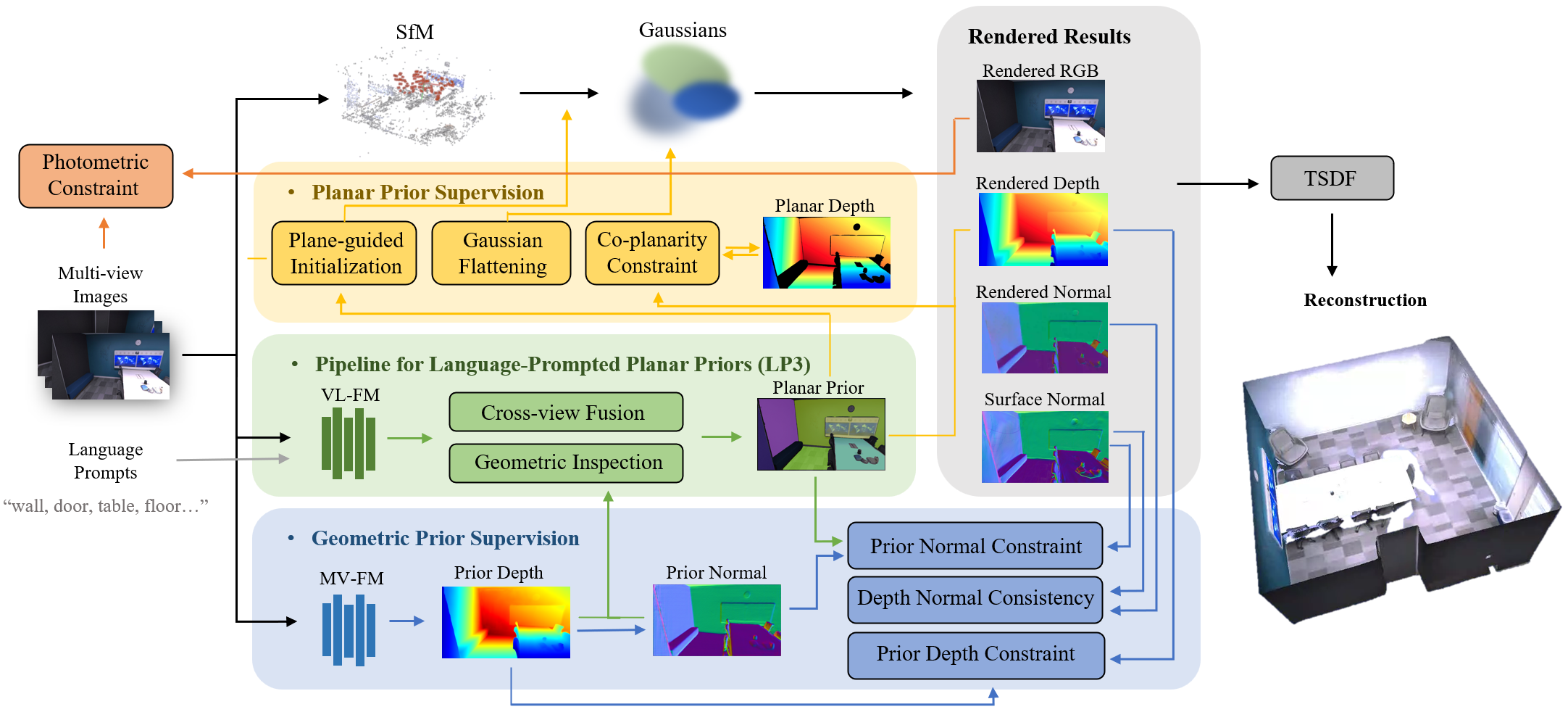

PlanarGS overview. Our method takes multi-view images and language prompts as inputs and obtains planar priors through the pipeline for Language-Prompted Planar Priors (LP3). Our planar prior supervision consists of plane-guided initialization, Gaussian flattening, and a co-planarity constraint, and is accompanied by geometric prior supervision. Both foundation models shown in the figure are pretrained.
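To make two of the planar supervision components more concrete, the following is a minimal sketch under stated assumptions, not the PlanarGS implementation. It assumes Gaussian flattening penalizes the smallest scale axis of each Gaussian (pushing it toward a thin disk), and that the co-planarity constraint fits a least-squares plane (via SVD) to the 3D points inside one planar region and penalizes their point-to-plane distances. The helpers `flattening_loss`, `fit_plane`, and `coplanarity_loss` are hypothetical names.

```python
import numpy as np


def flattening_loss(scales):
    """scales: (N, 3) per-Gaussian axis scales. Penalizing the smallest axis
    encourages each Gaussian to collapse into a flat disk."""
    return np.abs(scales).min(axis=1).mean()


def fit_plane(points):
    """Least-squares plane through (M, 3) points, M >= 3.
    Returns (unit normal, centroid); the normal is the right singular vector
    with the smallest singular value of the centered points."""
    if points.shape[0] < 3:
        raise ValueError("need at least 3 points to fit a plane")
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    return vt[-1], centroid


def coplanarity_loss(points):
    """Mean absolute point-to-plane distance of one planar region's points
    (e.g. depth-unprojected pixels inside a plane mask) to their fitted plane."""
    normal, centroid = fit_plane(points)
    return np.abs((points - centroid) @ normal).mean()
```

Fitting the plane per region, rather than assuming an axis-aligned floor or wall, lets the same term apply to any planar surface that LP3 segments.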

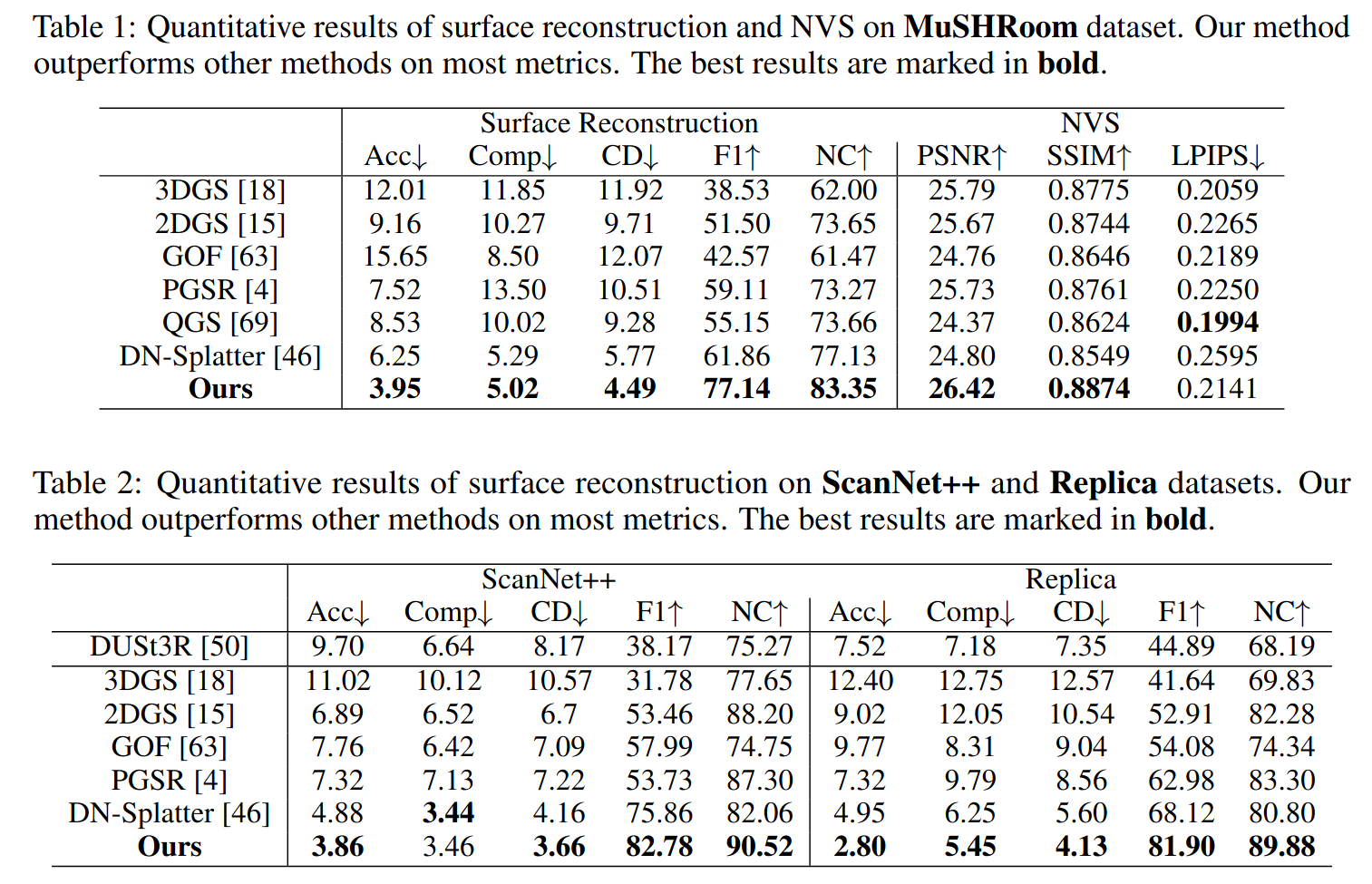

Comparison

Comparison with other methods. We evaluate on 8 scenes from Replica, 4 scenes from ScanNet++, and 5 complex scenes from MuSHRoom. To evaluate the quality of the reconstructed surfaces, we report Accuracy (Acc), Completion (Comp), and Chamfer Distance (CD) in cm, and F-score (F1) with a 5 cm threshold and Normal Consistency (NC) as percentages. For novel view synthesis (NVS), we report the standard PSNR, SSIM, and LPIPS metrics on rendered images. Visualizations of the reconstructions can be found at the top of the webpage.
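For readers unfamiliar with these surface metrics, the sketch below shows one common way to compute them from point clouds sampled on the predicted and ground-truth surfaces (coordinates in meters, unit normals). It assumes CD is the mean of Acc and Comp and NC averages the absolute cosine between nearest-neighbor normals in both directions; these conventions, the brute-force nearest-neighbor search, and the helper names `nearest`, `surface_metrics`, and `psnr` are illustrative assumptions, not the benchmark's exact evaluation script.

```python
import numpy as np


def nearest(src, dst):
    """For each point in src (N, 3), return the distance to and the index of
    its nearest point in dst (M, 3). Brute force, O(N * M) memory."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(-1)
    idx = d2.argmin(axis=1)
    return np.sqrt(d2[np.arange(len(src)), idx]), idx


def surface_metrics(pred_pts, pred_nrm, gt_pts, gt_nrm, threshold=0.05):
    d_pred, i_pred = nearest(pred_pts, gt_pts)  # prediction -> GT (accuracy)
    d_gt, i_gt = nearest(gt_pts, pred_pts)      # GT -> prediction (completion)
    acc, comp = d_pred.mean(), d_gt.mean()
    precision = (d_pred < threshold).mean()
    recall = (d_gt < threshold).mean()
    f1 = 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0.0
    # |cos| between each normal and its nearest neighbor's normal, both directions
    nc_acc = np.abs((pred_nrm * gt_nrm[i_pred]).sum(-1)).mean()
    nc_comp = np.abs((gt_nrm * pred_nrm[i_gt]).sum(-1)).mean()
    return {
        "Acc_cm": 100 * acc,
        "Comp_cm": 100 * comp,
        "CD_cm": 100 * (acc + comp) / 2,
        "F1_pct": 100 * f1,
        "NC_pct": 100 * (nc_acc + nc_comp) / 2,
    }


def psnr(img, ref):
    """PSNR in dB for images with values in [0, 1]."""
    mse = np.mean((img - ref) ** 2)
    return 10 * np.log10(1.0 / mse)
```

For example, a predicted surface offset 1 cm from a flat ground-truth surface gives Acc = Comp = CD = 1 cm and F1 = NC = 100%. For dense meshes, a KD-tree would replace the brute-force search.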